Solution

- Use Cases:
  - Consider the situation where a number of orders have been added to a job chain. These orders should then be serialized to guarantee that each order has completed the job chain before the next order starts.
  - Consider the situation where a number of job chains make use of the same resource, e.g. by accessing the same objects in a database. Orders of these job chains should be serialized to guarantee that only one order of one job chain can access the resource at a time. This use case applies, e.g., to NoSQL databases that provide limited data consistency and therefore require consistency to be enforced at access level by JobScheduler.

- Requirements
  - The solution implements resource locks that serialize access
    - for orders of any number of job chains,
    - for any number of orders of the same job chain,
    - for orders that are added to any job node in a job chain.
  - Resource locks are persistent, i.e. they are restored to the same state after a JobScheduler restart.
  - Resource locks can be acquired by job chains that are executed
    - with JobScheduler Agent instances, including multiple Agents for job nodes of the same job chain (cross-platform),
    - with JobScheduler Master instances running standalone or in a Passive Cluster or Active Cluster.
  - Resource locks can be monitored and, should the need arise, removed manually with the JOC GUI.

- Delimitation
  - Limiting the number of concurrent orders in a job chain by use of the `<job_chain max_orders="..."/>` attribute works exclusively for the first job node. The solution offered by this article can optionally be applied to any job node in a job chain.
  - This solution is focused on
    - locks for complete job chains. For locks at job (task) level see Locks.
    - exclusive locks; shared locks are not considered.
  - Persistence of resource locks is limited to a predefined duration. The default duration is 24 hrs. and can be configured to individual needs.
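For comparison, the built-in limit mentioned above is set with a single attribute on the job chain. The following is a minimal sketch in the JobScheduler 1.x job chain XML format. The node states and the job names `job_step_1` and `job_step_2` are hypothetical.

```xml
<!-- Hypothetical job chain using the built-in limit instead of a resource lock -->
<!-- As described above, max_orders only takes effect for the first job node -->
<job_chain max_orders="1">
    <job_chain_node state="step_1" job="job_step_1" next_state="step_2" error_state="error"/>
    <job_chain_node state="step_2" job="job_step_2" next_state="success" error_state="error"/>
    <job_chain_node.end state="success"/>
    <job_chain_node.end state="error"/>
</job_chain>
```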

- Solution Outline:

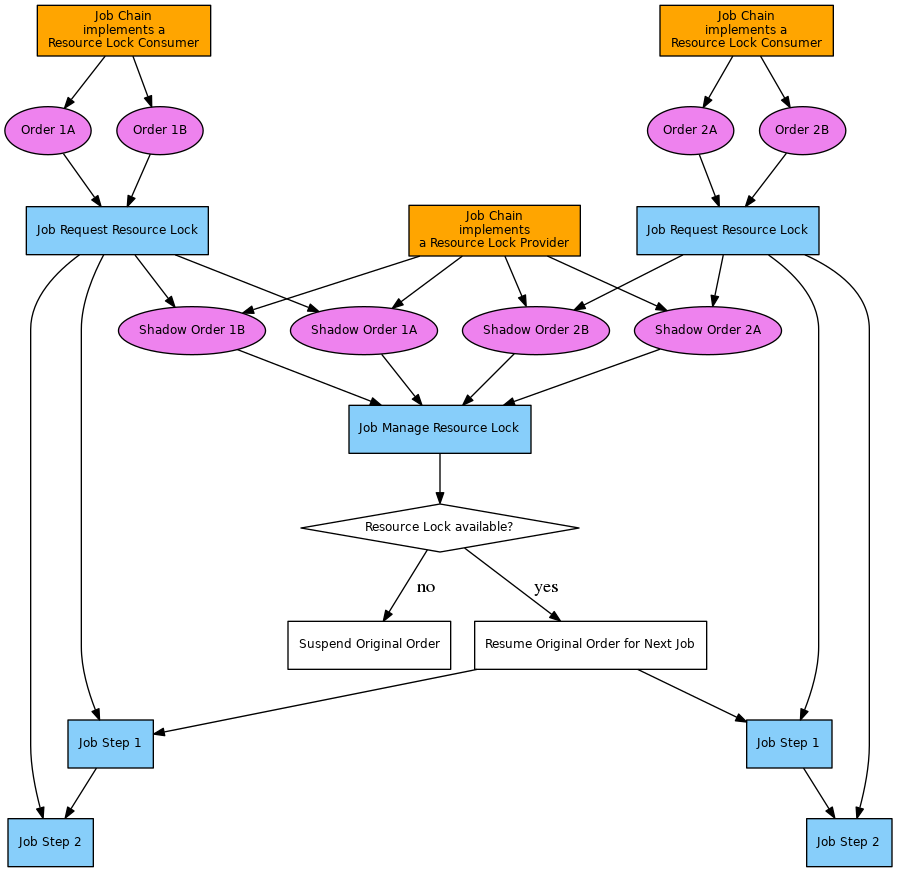

- The solution implements two parts represented by job chains:
  - Resource Lock Provider implements a job chain that accepts shadow orders for serialized access to resources. The job chain guarantees that only one order at a time is granted a resource lock. It keeps orders in the originating job chains suspended as long as the resource lock is held by a different order and resumes these orders when the resource lock becomes available. Any number of orders of the same or of different job chains can use the Resource Lock Provider job chain for serialization.
  - Resource Lock Consumer provides sample job chains that make use of a resource that should be accessed exclusively by one order at a time.

- References
  - SourceForge Forum Discussion Thread
  - JS-402

Download

- Download resource_lock_provider.zip

- Download resource_lock_consumer.zip

- Extract the archives to the `./config/live` folder of your JobScheduler installation.
  - The archives extract the files to the folders `resource_lock_provider` and `resource_lock_consumer` respectively.
  - You can store the `resource_lock_consumer` files in any folder you like. However, if you move the `resource_lock_provider` files to some other location, then you have to adjust the settings in the `resource_lock_consumer` objects.

Pattern

Implementation

Job: request_resource_lock

- This job can be used by resource lock consumers as the first job node in a job chain.

- The job has no special job script implementation.

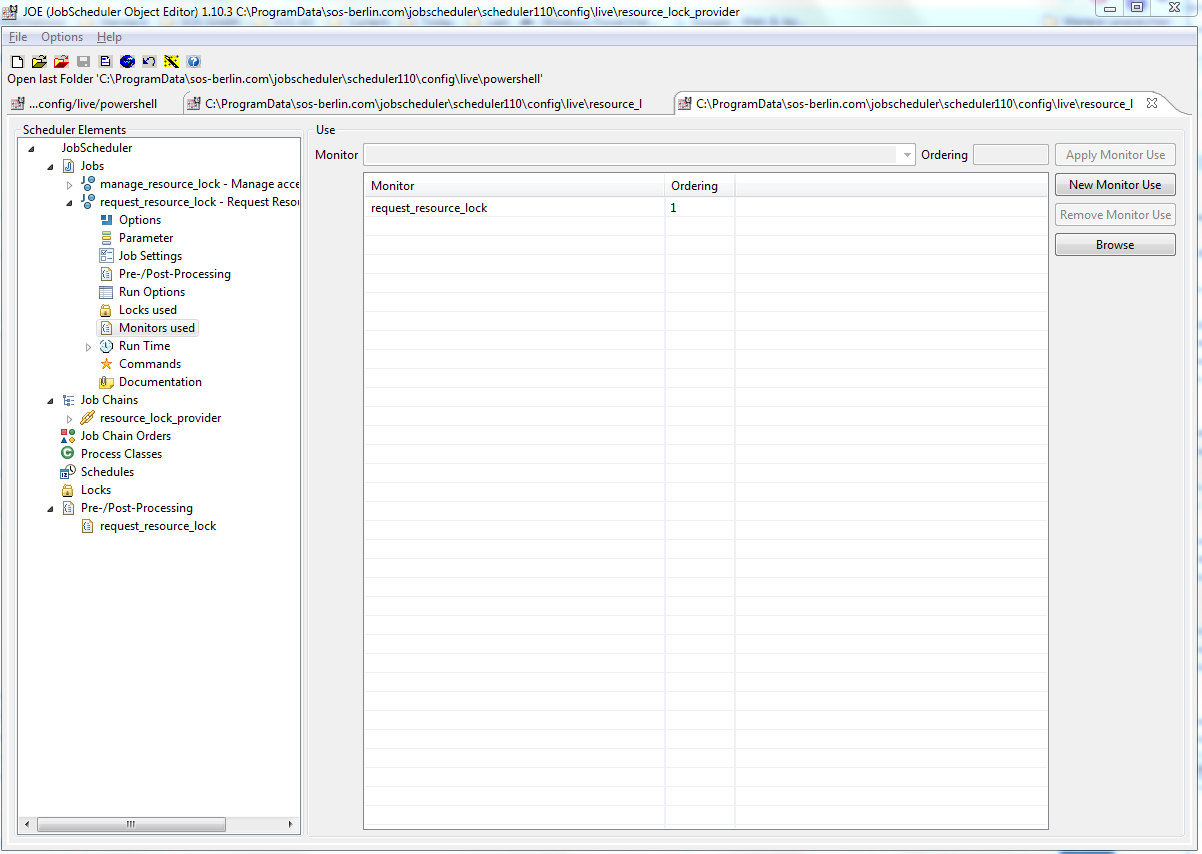

- The job makes use of the monitor `request_resource_lock`.

Monitor: request_resource_lock

- This monitor implements a pre-processing script for jobs that request resource locks.

- The monitor is used by the `request_resource_lock` job above and can be assigned to any individual job node of a job chain that requires a resource lock.

Job: manage_resource_lock

- This job handles resource locks.

- For incoming shadow orders that have been created by the `request_resource_lock` monitor, the originating orders are resumed if the resource lock is not held by a different order.
  - Shadow orders are checked repeatedly by this job by use of a setback interval.
  - Shadow orders are completed if the originating order has completed the job chain or has been removed.

Configuration

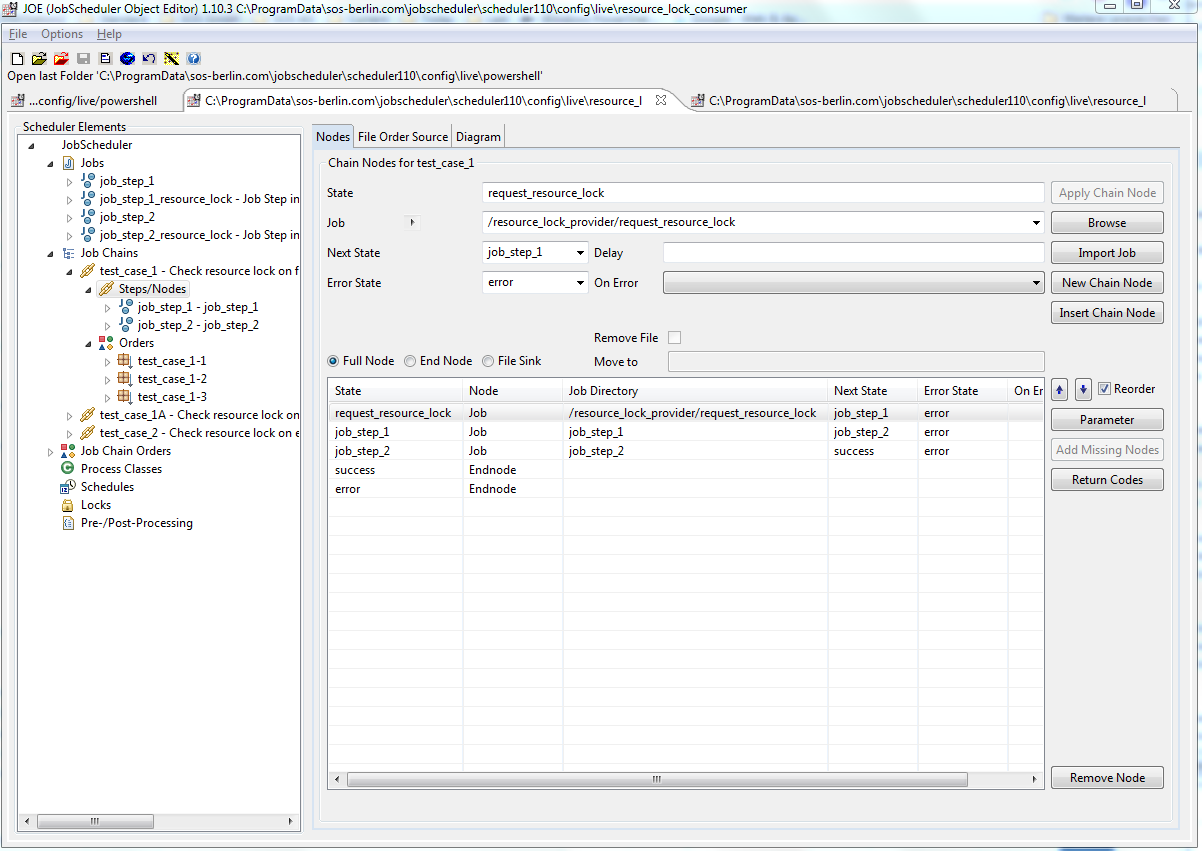

- Use the sample job chain `test_case_1` from the Resource Lock Consumer sample or any individual job chain and assign the job `/resource_lock_provider/request_resource_lock` as the first job node of the job chain. This configuration guarantees that newly created orders that are added at the beginning of the job chain are started only after any predecessor orders have completed the job chain.

- Should resource lock consistency be required for later job nodes in a job chain, i.e. if orders are created not for the beginning of a job chain but for later job nodes, e.g. when using split job nodes, then assign the monitor `/resource_lock_provider/request_resource_lock` to the respective jobs.

- The repeat interval at which resource locks are checked is configured with the `manage_resource_lock` job of the Resource Lock Provider:
  - The interval is 60s by default.
  - The maximum number of retries is 1440, which results in a default lifetime of 24 hrs. for a resource lock (1440 x 60s).
  - Both values are specified as setback settings of this job.
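The default values above correspond to setback settings of roughly the following form. This is a sketch assuming the JobScheduler 1.x `<delay_order_after_setback>` element. The existing script of the `manage_resource_lock` job is left out, and the actual file in the archive may differ.

```xml
<!-- Sketch: setback settings of the manage_resource_lock job (script element omitted) -->
<job order="yes" stop_on_error="no">
    <!-- ... existing script of manage_resource_lock, unchanged ... -->

    <!-- Re-check a shadow order every 60 seconds, starting with the first setback -->
    <delay_order_after_setback setback_count="1" is_maximum="no" delay="60"/>
    <!-- Stop after 1440 setbacks: 1440 x 60s = 24 hours lifetime of a resource lock -->
    <delay_order_after_setback setback_count="1440" is_maximum="yes"/>
    <run_time/>
</job>
```

For example, to extend the lifetime to 48 hours at the same 60s interval, you would raise the maximum `setback_count` to 2880.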

Hints

- When a resource lock is requested by use of the `request_resource_lock` monitor, then
  - a delay of 2s is applied to allow the `manage_resource_lock` job to process the shadow order. A minimum delay is required to guarantee synchronization.
  - for shell jobs, an error message is added to the order log when the order is initially suspended. This message is harmless and can be ignored. For API jobs, no errors or warnings are raised. We recommend that you use the `request_resource_lock` job as the first job node of your job chain and use the monitor for subsequent job nodes only.

- The lifetime of a resource lock is limited by the duration covered by the setback settings of the `manage_resource_lock` job, which defaults to 24 hrs.

- If you make frequent use of resource locks, then you should consider pre-loading the `manage_resource_lock` job by use of the setting `<job min_tasks="1"/>`. This causes the job to react immediately to incoming orders without having to load a JVM for each execution of this job.

- When operating the Resource Lock Provider job chain in a location other than the directory `./config/live/resource_lock_provider`, the following settings have to be adjusted:
  - In the Resource Lock Provider, the `request_resource_lock` monitor script specifies the path of the provider job chain.
  - In the samples for the Resource Lock Consumer:
    - the job chains `test_case_1` and `test_case_1A` both assign the job `/resource_lock_provider/request_resource_lock` as the first job node.
    - the job chain `test_case_2` makes use of the jobs `job_step_1_resource_lock` and `job_step_2_resource_lock`, which are assigned the monitor located at `/resource_lock_provider/request_resource_lock`.
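The pre-loading hint amounts to one attribute on the `manage_resource_lock` job. The following is a sketch assuming the JobScheduler 1.x job XML format, with the job's existing content omitted.

```xml
<!-- Sketch: keep one task of manage_resource_lock running so that no JVM is loaded per execution -->
<job order="yes" stop_on_error="no" min_tasks="1">
    <!-- ... existing script and setback settings of manage_resource_lock, unchanged ... -->
    <run_time/>
</job>
```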

Usage

The following test cases are available with the Resource Lock Consumer sample job chains.

Test case 1: Check resource lock on first job step

- The job chain `test_case_1` makes use of the `/resource_lock_provider/request_resource_lock` job as the first job node.
  - No parameterization is used. Therefore the scope of the resource lock is the current job chain, which is forced to process orders sequentially.
  - Adding multiple orders to this job chain causes each order to be processed only after its predecessor orders have completed the job chain.
  - Start the orders `test_case_1-1`, ..., `test_case_1-3` by use of JOC.
    - Add any number of new orders by use of JOC with the Add Order context menu.
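A job chain with this layout could look like the following sketch in the JobScheduler 1.x job chain XML format. Only the first node is taken from this article. The node state names and the job `job_step_1` are hypothetical placeholders for the consumer's own jobs.

```xml
<!-- Sketch of a consumer job chain such as test_case_1 (names after the first node are hypothetical) -->
<job_chain>
    <!-- First node: request the resource lock; the order stays suspended until the lock is available -->
    <job_chain_node state="request_resource_lock" job="/resource_lock_provider/request_resource_lock" next_state="step_1" error_state="error"/>
    <!-- Work that requires exclusive access to the resource -->
    <job_chain_node state="step_1" job="job_step_1" next_state="success" error_state="error"/>
    <job_chain_node.end state="success"/>
    <job_chain_node.end state="error"/>
</job_chain>
```

The absolute job path in the first node is one of the settings that have to be adjusted if the Resource Lock Provider is moved to a different folder.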

Test case 1A: Lock job chains

- The job chain `test_case_1A` is a copy of `test_case_1` but makes use of the same resource lock as `test_case_1`.
  - The orders `test_case_1A-1`, ..., `test_case_1A-3` use the parameter `resource_lock` with the value `/resource_lock_consumer/test_case_1`, which forces orders of both job chains to be processed sequentially:
    - Start the orders `test_case_1-1`, ..., `test_case_1-3` by use of JOC. They run in job chain `test_case_1`.
    - Start the orders `test_case_1A-1`, ..., `test_case_1A-3` by use of JOC. They run in job chain `test_case_1A` but are synchronized with the orders of job chain `test_case_1`.
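An order that shares the lock of `test_case_1` could be declared as in the following sketch. It assumes the JobScheduler 1.x order file format and the usual `<job chain>,<order id>.order.xml` file naming, e.g. `test_case_1A,test_case_1A-1.order.xml`. Only the parameter name and value are taken from this article.

```xml
<!-- Sketch of test_case_1A,test_case_1A-1.order.xml -->
<order>
    <params>
        <!-- Use the resource lock of job chain test_case_1 instead of the lock of the current job chain -->
        <param name="resource_lock" value="/resource_lock_consumer/test_case_1"/>
    </params>
    <run_time/>
</order>
```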

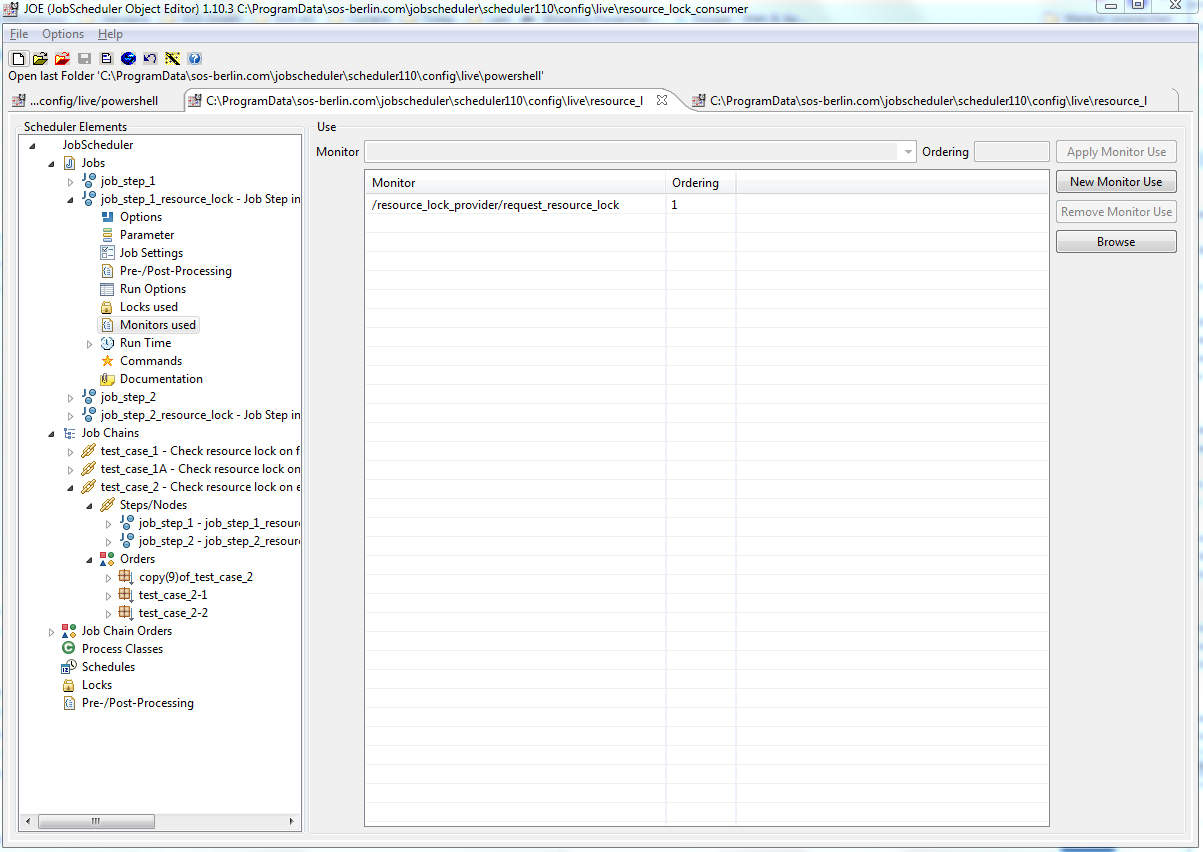

Test case 2: Check resource lock on each job step

- This test case is designed to prevent parallel execution of orders in a job chain, independently of the job node for which orders are started.

- The job chain `test_case_2` makes use of the jobs `job_step_1_resource_lock` and `job_step_2_resource_lock`. Both jobs are assigned the `request_resource_lock` monitor. The initial job node `request_resource_lock` mentioned above is not used, as the individual jobs make use of the resource lock monitor.
  - Adding multiple orders to this job chain causes orders to be processed sequentially, as in test case 1:
    - Start the orders `test_case_2-1`, ..., `test_case_2-3` by use of JOC.
      - Add any number of new orders by use of JOC with the Add Order context menu.
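Each of these jobs only needs a reference to the monitor. The following sketch shows `job_step_1_resource_lock` as a shell job in the JobScheduler 1.x job XML format. The script body is a hypothetical placeholder. As noted in the hints, shell jobs that use the monitor write a harmless error message to the order log when the order is initially suspended.

```xml
<!-- Sketch of job_step_1_resource_lock.job.xml (script body is a placeholder) -->
<job order="yes" stop_on_error="no">
    <script language="shell"><![CDATA[
echo "job_step_1_resource_lock: accessing the shared resource"
    ]]></script>
    <!-- Absolute path of the monitor; adjust it if the Resource Lock Provider is moved -->
    <monitor.use monitor="/resource_lock_provider/request_resource_lock"/>
    <run_time/>
</job>
```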