Introduction

- JOC Cockpit comes preinstalled with a Docker® image.

- Before running the JOC Cockpit container the following requirements should be met:

- Either the embedded H2® database should be used or an external database should be available and accessible - see the JS7 - Database article for more information.

- Docker volumes are created with persistent JOC Cockpit configuration data and log files.

- A Docker network or similar mechanism is available to enable network access between JOC Cockpit, Controller instance(s) and Agents.

- Initial operation for JOC Cockpit includes:

- registering the Controller instance(s) and Agents that are used in the job scheduling environment.

- optionally registering a JS7 Controller cluster.

Installation Video

This video explains the installation of JOC Cockpit for Docker® containers.

Pulling the JOC Cockpit Image

Pull the version of the JOC Cockpit image that corresponds to the JS7 release in use:

docker image pull sosberlin/js7:joc-2-2-0
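
The tag in the command above appears to encode the release number with dots replaced by hyphens. The following is a minimal sketch under that assumption. The pattern is inferred from the single tag shown here and should be checked against the tags published in the image repository. The joc_image_name helper is hypothetical and not part of JS7.

```shell
#!/bin/sh
# Hypothetical helper: derive the image name from a JS7 release number.
# Assumption: tags follow the pattern of the example above (joc-2-2-0 for release 2.2.0).
joc_image_name() {
  # replace the dots of the release number with hyphens
  printf 'sosberlin/js7:joc-%s\n' "$(printf '%s' "$1" | tr '.' '-')"
}

# Usage: docker image pull "$(joc_image_name 2.2.0)"
joc_image_name 2.2.0
```

Deriving the name in one place keeps the pull command and the run command on the same release.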

Running the JOC Cockpit Container

After pulling the JOC Cockpit image you can run the container with a number of options such as:

#!/bin/sh

docker run -dit --rm \
      --hostname=js7-joc-primary \
      --network=js7 \
      --publish=17446:4446 \
      --env="RUN_JS_JAVA_OPTIONS=-Xmx256m" \
      --env="RUN_JS_USER_ID=$(id -u $USER):$(id -g $USER)" \
      --mount="type=volume,src=js7-joc-primary-config,dst=/var/sos-berlin.com/js7/joc/resources/joc" \
      --mount="type=volume,src=js7-joc-primary-logs,dst=/var/log/sos-berlin.com/js7/joc" \
      --name js7-joc-primary \
      sosberlin/js7:joc-2-2-0

Explanation:

- --network: The above example makes use of a Docker network - created, for example, using the docker network create js7 command - to allow network sharing between containers. Note that any inside ports used by Docker containers are visible within a Docker network. Therefore a JOC Cockpit instance running for the inside port 4446 can be accessed with the container's hostname and the same port within the Docker network.

- --publish: The JOC Cockpit has been configured to listen to the HTTP port 4446. An outside port of the Docker host can be mapped to the JOC Cockpit inside HTTP port. This is not required for use with a Docker network, see --network, however, it will allow direct access to the JOC Cockpit from the Docker host via its outside port.

- --env=RUN_JS_JAVA_OPTIONS: This allows any Java options to be injected into the JOC Cockpit container. Preferably this is used to specify memory requirements of JOC Cockpit, for example, with -Xmx256m.

- --env=RUN_JS_USER_ID: Inside the container the JOC Cockpit is operated by the jobscheduler user account. In order to access, for example, log files created by the JOC Cockpit, which are mounted to the Docker host it is recommended that you map the account that is starting the container to the jobscheduler account inside the container. The RUN_JS_USER_ID environment variable accepts the user ID and group ID of the account that will be mapped. The example above makes use of the current user.

- --mount: The following volume mounts are suggested:

- config: The optional configuration folder allows specification of individual settings for JOC Cockpit operation, see the chapters below and the JS7 - JOC Cockpit Configuration Items article. Without this folder the default settings are used.

- logs: In order to make JOC Cockpit log files persistent they have to be written to a volume that is mounted for the container.

- Feel free to adjust the volume name from the src attribute. However, the value of the dst attribute should not be changed as it reflects the directory hierarchy inside the container.

Docker offers a number of ways of mounting or binding volumes to containers including, for example, creation of local directories and binding them to volumes like this:

Example how to create Docker volumes

# example to map volumes to directories on the Docker host prior to running the JOC Cockpit container
mkdir -p /home/sos/js7/js7-joc-primary/config /home/sos/js7/js7-joc-primary/logs
docker volume create --driver local --opt o=bind --opt type=none --opt device="/home/sos/js7/js7-joc-primary/config" js7-joc-primary-config
docker volume create --driver local --opt o=bind --opt type=none --opt device="/home/sos/js7/js7-joc-primary/logs" js7-joc-primary-logs

There are alternative ways to achieve this. As a result you should have access to the directories /var/sos-berlin.com/js7/joc/resources/joc and /var/log/sos-berlin.com/js7/joc inside the container and data in both locations should be persistent. If volumes are not created before running the container then they will be created and mounted automatically. However, you should have access to data in the volumes, e.g. by access to /var/lib/docker/volumes/js7-joc-primary-config etc.
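
The Docker network used with --network has to exist before the container is started, and it helps to know where a volume's data is stored on the Docker host. The following is a minimal sketch using standard Docker CLI commands. The function names are hypothetical, and the network and volume names are taken from the docker run example above.

```shell
#!/bin/sh
# Sketch: create the Docker network only if it is missing and locate volume data on the host.
# Function names are illustrative; network and volume names match the docker run example.

ensure_network() {
  # "docker network inspect" returns a non-zero exit code if the network does not exist
  docker network inspect "$1" >/dev/null 2>&1 || docker network create "$1"
}

volume_mountpoint() {
  # prints the directory on the Docker host that holds the volume's data
  docker volume inspect --format '{{ .Mountpoint }}' "$1"
}

# Usage:
#   ensure_network js7
#   volume_mountpoint js7-joc-primary-config
```

For volumes created automatically by Docker the reported mount point is typically located below /var/lib/docker/volumes. For volumes bound to local directories, as in the example above, the data is found in the directory given with the device option.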

Installing Database Objects

JOC Cockpit requires a database connection, see JS7 - Database.

The database connection is configured before initial start of the JOC Cockpit container.

The following chapters can be skipped when using the H2® embedded database to evaluate JS7. Here it is sufficient to have the Hibernate configuration ready as this database runs in pre-configured mode inside the container.

Check the JDBC Driver

JS7 ships with a number of JDBC Drivers.

- JDBC Drivers for use with H2®, MariaDB®, MySQL®, Oracle®, PostgreSQL® are included with JS7.

- For details about JDBC Driver versions see the JS7 - Database article.

- Should you have a good reason to use a different version of a JDBC Driver then you can apply the JDBC Driver version of your choice.

- For use with Microsoft SQL Server® the JDBC Driver has to be downloaded by the user as it cannot be bundled with open source software due to license conflicts.

- You can download JDBC Drivers from vendors' sites and store the resulting *.jar file(s) in the following location:

- Location in the container: /var/sos-berlin.com/js7/joc/resources/joc/lib

- Consider accessing this directory from the volume that is mounted when running the container, e.g. from a local folder /home/sos/js7/js7-joc-primary/config/lib.

- Refer to the JS7 - Database article for details about the procedure.
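
Storing a downloaded JDBC Driver can be sketched as follows, assuming the config volume is bound to the local folder /home/sos/js7/js7-joc-primary/config as in the volume example above. The install_jdbc_driver function and the file name in the usage comment are hypothetical.

```shell
#!/bin/sh
# Sketch: copy a downloaded JDBC Driver into the "lib" folder of the mounted config directory.
# Arguments: 1 = path to the driver *.jar file, 2 = config directory on the Docker host
install_jdbc_driver() {
  jar_file="$1"
  config_dir="${2:-/home/sos/js7/js7-joc-primary/config}"

  if [ ! -f "$jar_file" ]; then
    echo "JDBC Driver not found: $jar_file" >&2
    return 1
  fi

  # the "lib" folder maps to /var/sos-berlin.com/js7/joc/resources/joc/lib inside the container
  mkdir -p "$config_dir/lib" && cp "$jar_file" "$config_dir/lib/"
}

# Usage (hypothetical file name):
#   install_jdbc_driver ./mssql-jdbc.jar /home/sos/js7/js7-joc-primary/config
```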

Configure the Database Connection

In a first step users have to create a database schema and account for JS7. The database schema has to support the Unicode character set.

- For examples of how to set up the database see JS7 - Database.

In a second step the database connection has to be specified from a Hibernate configuration file:

- Location in the container: /var/sos-berlin.com/js7/joc/resources/joc/hibernate.cfg.xml

- Consider accessing the configuration file from the volume that is mounted when running the container, for example, from a local folder /home/sos/js7/js7-joc-primary/config.

- Information about modification of the hibernate.cfg.xml file for your DBMS can be found in the JS7 - Database article.
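
Before starting the container it can be useful to check which database the Hibernate configuration file points to. The following is a minimal sketch, assuming the file stores the JDBC URL in a one-line property element named hibernate.connection.url, which is the standard Hibernate property name. The hibernate_connection_url function is hypothetical.

```shell
#!/bin/sh
# Sketch: print the JDBC connection URL configured in a Hibernate configuration file.
# Assumption: the URL is stored in a one-line element of the form
#   <property name="hibernate.connection.url">jdbc:...</property>
hibernate_connection_url() {
  sed -n 's|.*<property name="hibernate.connection.url">\(.*\)</property>.*|\1|p' "$1"
}

# Usage:
#   hibernate_connection_url /home/sos/js7/js7-joc-primary/config/hibernate.cfg.xml
```

If the function prints nothing then the property is either missing or spread across several lines, and the file should be inspected manually.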

Create Database Objects

During installation JOC Cockpit can be configured

- not to create database objects by the installer,

- to create database objects by the installer,

- to check on start-up if database objects exist and otherwise to create them on-the-fly.

Users can force creation of database objects by executing the following script inside the container:

# create database objects
docker exec -ti js7-joc-primary /bin/sh -c /opt/sos-berlin.com/js7/joc/install/joc_install_tables.sh

Explanation:

- docker exec -ti is the command that connects to the JOC Cockpit container js7-joc-primary.

- js7-joc-primary is the name of the JOC Cockpit container as specified in the docker run command mentioned above.

- /bin/sh -c runs a shell inside the container and executes a script to install and to populate the database objects required for operation of JOC Cockpit.

- Note that there is no harm in re-running the script a number of times as it will not remove existing data from the database.
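
The script can only be executed while the container is running. The following is a minimal sketch that checks the container state first, assuming the container name from the docker run example above. The create_db_objects function is hypothetical, and the -ti options are omitted so that the call also works without a terminal, for example from another script.

```shell
#!/bin/sh
# Sketch: run the table installation script only if the container is running.
# Argument: 1 = container name (defaults to the name used in the docker run example)
create_db_objects() {
  container="${1:-js7-joc-primary}"

  # "docker inspect" prints "true" for a running container
  if [ "$(docker inspect -f '{{.State.Running}}' "$container" 2>/dev/null)" != "true" ]; then
    echo "container $container is not running" >&2
    return 1
  fi

  docker exec "$container" /bin/sh -c /opt/sos-berlin.com/js7/joc/install/joc_install_tables.sh
}

# Usage:
#   create_db_objects js7-joc-primary
```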

Log Files

Access to log files is essential to identify problems during installation and operation of containers.

When mounting a volume for log files as explained above you should have access to the files indicated in the JS7 - Log Files and Locations article.

- The jetty.log file reports about initial start up of the servlet container.

- The joc.log file includes e.g. information about database access.
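
Both log files can be checked from the Docker host. The following is a minimal sketch, assuming the logs volume is bound to the local folder /home/sos/js7/js7-joc-primary/logs as in the volume example above and that both files are located directly in that folder. The show_joc_logs function is hypothetical.

```shell
#!/bin/sh
# Sketch: print the last lines of the JOC Cockpit log files from the mounted log directory.
# Arguments: 1 = log directory on the Docker host, 2 = number of lines (default 20)
# Assumption: jetty.log and joc.log are located directly in that directory.
show_joc_logs() {
  logs_dir="${1:-/home/sos/js7/js7-joc-primary/logs}"
  for log_file in jetty.log joc.log; do
    if [ -f "$logs_dir/$log_file" ]; then
      echo "== $log_file =="
      tail -n "${2:-20}" "$logs_dir/$log_file"
    else
      echo "== $log_file not found in $logs_dir ==" >&2
    fi
  done
}

# Usage:
#   show_joc_logs /home/sos/js7/js7-joc-primary/logs 50
```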

Performing Initial Operation

For initial operation, the JOC Cockpit is used to make Controller instances and Agent instances known to your job scheduling environment.

General information about initial operation can be found in the following article:

Additional information about initial operation with containers can be found below.

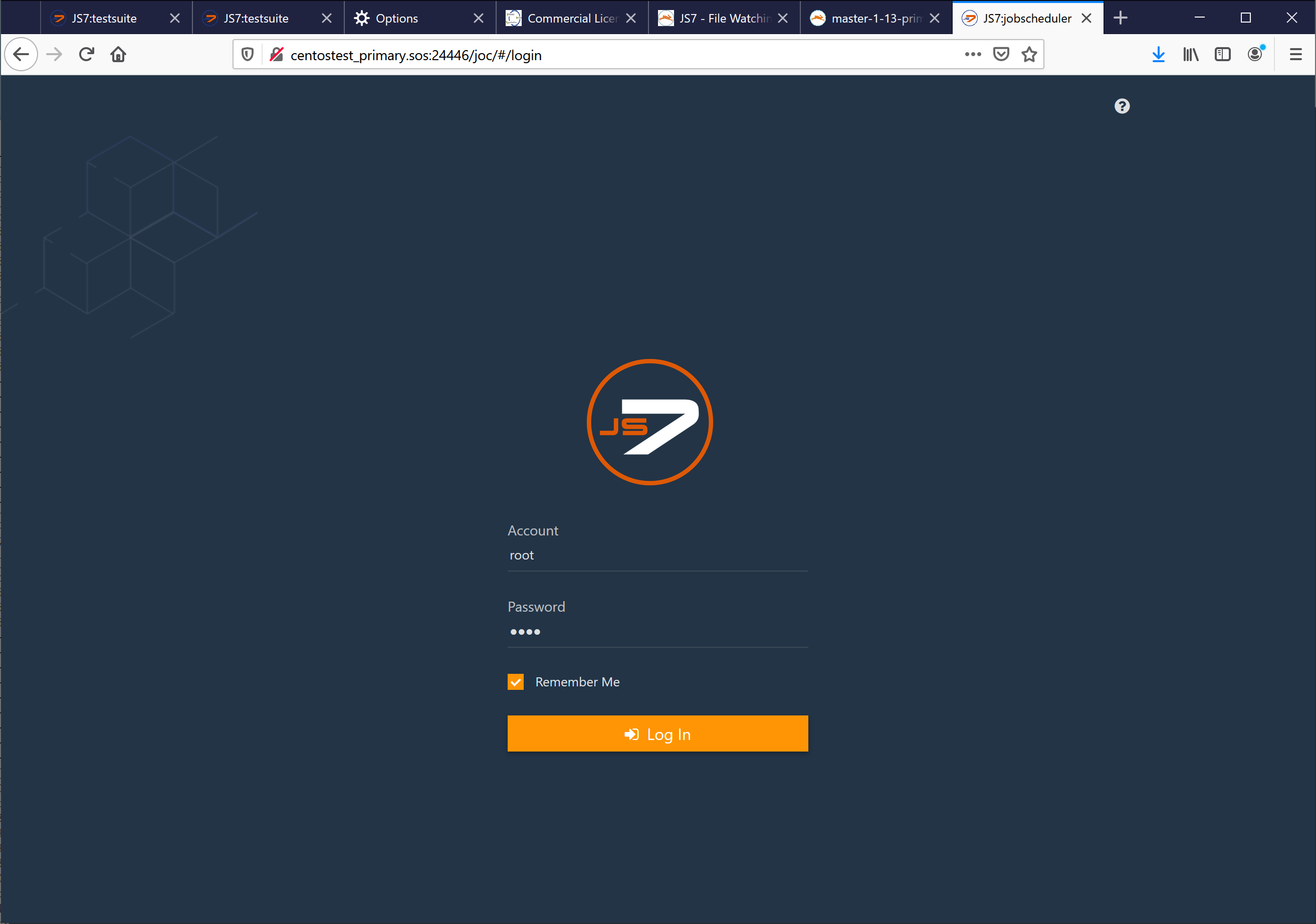

Accessing JOC Cockpit from your Browser

Explanation:

- For the JOC Cockpit URL: in most situations you can use the host name of the Docker host and the port that was specified when starting the container.

- From the example above this could be http://centostest_primary.sos:17446 if centostest_primary.sos were your Docker host and 17446 were the outside HTTP port of the container.

- Note that the Docker host has to allow incoming traffic to the port specified. This might require adjustment of the port or the creation of firewall rules.

- By default JOC Cockpit ships with the following credentials:

- User Account: root

- Password: root
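
Before opening the URL in a browser it can help to check from the command line that JOC Cockpit answers on the published port. The following is a minimal sketch using curl. The wait_for_joc function is hypothetical, and the URL in the usage comment is the one from the example above.

```shell
#!/bin/sh
# Sketch: wait until JOC Cockpit answers HTTP requests on the published port.
# Arguments: 1 = URL, 2 = number of attempts (default 30), 3 = delay in seconds (default 2)
wait_for_joc() {
  url="$1"
  attempts="${2:-30}"
  delay="${3:-2}"

  while [ "$attempts" -gt 0 ]; do
    # --silent suppresses progress output, --fail turns HTTP errors (>= 400) into a non-zero exit code
    if curl --silent --fail --output /dev/null "$url"; then
      echo "JOC Cockpit is reachable at $url"
      return 0
    fi
    attempts=$((attempts - 1))
    sleep "$delay"
  done

  echo "JOC Cockpit did not respond at $url" >&2
  return 1
}

# Usage:
#   wait_for_joc http://centostest_primary.sos:17446
```

If the check fails although the container is running, the firewall rules of the Docker host and the --publish option of the container are the first places to look.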

Register Controller and Agents

After login a dialog window pops up that asks for the registration of a Controller. You will find the same dialog later on in the User -> Manage Controllers/Agents menu.

You have a choice of registering a Standalone Controller or registering a Controller Cluster for high availability (requires JS7 - License).

Register Standalone Controller

This registration dialog allows a Standalone Controller or a Controller Cluster to be specified.

Use of the Standalone Controller is within the scope of the JS7 open source license.

Explanation:

- You can add a title to the Controller instance that will be shown in the JS7 - Dashboard View.

- The URL of the Controller instance has to match the hostname and port used to operate the Controller instance.

- Should you use a Docker network then all containers will "see" each other and all internal container ports will be accessible within the network.

- In the above example a Docker network js7 was used and the Controller container was started with the hostname js7-controller-primary.

- The port 4444 is the inside HTTP port of the Controller that is visible in the Docker network.

- If you are not using a Docker network then you are free to decide how to map hostnames:

- The Controller container could be accessible from the Docker host, i.e. you would specify the hostname of the Docker host.

- The outside HTTP port of the Controller container, which is specified with the --publish option when starting the Controller container, has to be used.
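
The two cases described above result in different URLs for the registration dialog. The following sketch prints both variants. The controller_url function is hypothetical, and docker-host.example.com and 14444 are placeholder values for the Docker host and the outside port, which are not taken from this article.

```shell
#!/bin/sh
# Sketch: compose the Controller URL to enter in the registration dialog.
# Arguments: 1 = hostname, 2 = port
controller_url() {
  printf 'http://%s:%s\n' "$1" "$2"
}

# with the Docker network "js7": container hostname and inside HTTP port
controller_url js7-controller-primary 4444

# without a Docker network: hostname of the Docker host and the outside port
# (docker-host.example.com and 14444 are hypothetical values)
controller_url docker-host.example.com 14444
```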

Register Controller Cluster

A Controller cluster implements high availability for automated fail-over if a Controller instance is terminated or becomes unavailable.

Note that the high availability clustering feature is subject to JS7 - License. Without a license, fail-over/switch-over will not take place between Controller cluster members.

Explanation:

- The Primary Controller instance, Secondary Controller instance and Agent Cluster Watcher are specified in this dialog.

- You can add a title for each Controller instance that will be shown in the JS7 - Dashboard View.

- Primary and Secondary Controller instances require a URL as seen from JOC Cockpit.

- In addition, a URL can be specified for each Controller instance to allow it to be accessed by its partner cluster member.

- Typically the URL used between Controller instances is the same as the URL used by JOC Cockpit.

- Should you operate e.g. a proxy server between Primary and Secondary Controller instances then the URL for a given Controller instance to access its partner cluster member might be different from the URL used by JOC Cockpit.

- The URL of the Controller instance has to match the hostname and port that the Controller instance is operated on.

- Should you use a Docker network then all containers will "see" each other and all inside container ports are accessible within the network.

- In the above example a Docker network js7 was used and the Primary Controller container was started with the hostname js7-controller-primary. The Secondary Controller was started with the hostname js7-controller-secondary.

- The port 4444 is the inside HTTP port of the Controller instance that is visible in the Docker network.

- If you are not using a Docker network then you are free to decide how to map hostnames:

- The Controller container could be accessible from the Docker host, i.e. you would specify the hostname of the Docker host.

- The outside HTTP port of the Controller instance, which was specified with the --publish option when starting the Controller container, has to be used.

- The Agent Cluster Watcher is required for operation of a Controller cluster. The Agent is contacted by Controller cluster members to verify the cluster status should no direct connection between Controller cluster members be available.

- Note that the example above makes use of an Agent that by default is configured for use with HTTP connections.

- For use of the Agent's hostname and port the same applies as for Controller instances.

Register Agents

After the connection between JOC Cockpit and the Controller is established you can add Agents like this:

Explanation:

- For each Agent a unique identifier is specified, the Agent ID. The identifier remains in place for the lifetime of an Agent and cannot be modified.

- You can add a name for the Agent that will be used when assigning jobs to be executed with this Agent. The Agent name can be modified later on.

- In addition you can add alias names to make the same Agent available under different names.

Further Resources

Configuring the JOC Cockpit

Note that it is not necessary to configure the JOC Cockpit - it runs out-of-the-box. The default configuration specifies that:

- HTTP connections are used which expose unencrypted communication between clients and JOC Cockpit. Authentication is performed by hashed passwords.

Users who intend to operate a compliant and secure job scheduling environment or who wish to operate JOC Cockpit as a cluster for high availability are referred to the JS7 - JOC Cockpit Configuration for Docker Containers article series.

Build the JOC Cockpit Image

Users who wish to create their own individual images of the JOC Cockpit can find instructions in the JS7 - JOC Cockpit Build for Docker Image article.